Archive 6

RAG Data Poisoning: Key Concepts Explained

Ian Webster · 11/4/2024

Attackers can poison RAG knowledge bases to manipulate AI responses.

Does Fuzzing LLMs Actually Work?

Vanessa Sauter · 10/17/2024

Traditional fuzzing fails against LLMs.

How Do You Secure RAG Applications?

Vanessa Sauter · 10/14/2024

RAG applications face unique security challenges beyond foundation models.

Prompt Injection: A Comprehensive Guide

Ian Webster · 10/9/2024

Prompt injections are the most critical LLM vulnerability.

Understanding Excessive Agency in LLMs

Ian Webster · 10/8/2024

When LLMs have too much power, they become dangerous.

Preventing Bias & Toxicity in Generative AI

Ian Webster · 10/8/2024

Biased AI outputs can destroy trust and violate regulations.

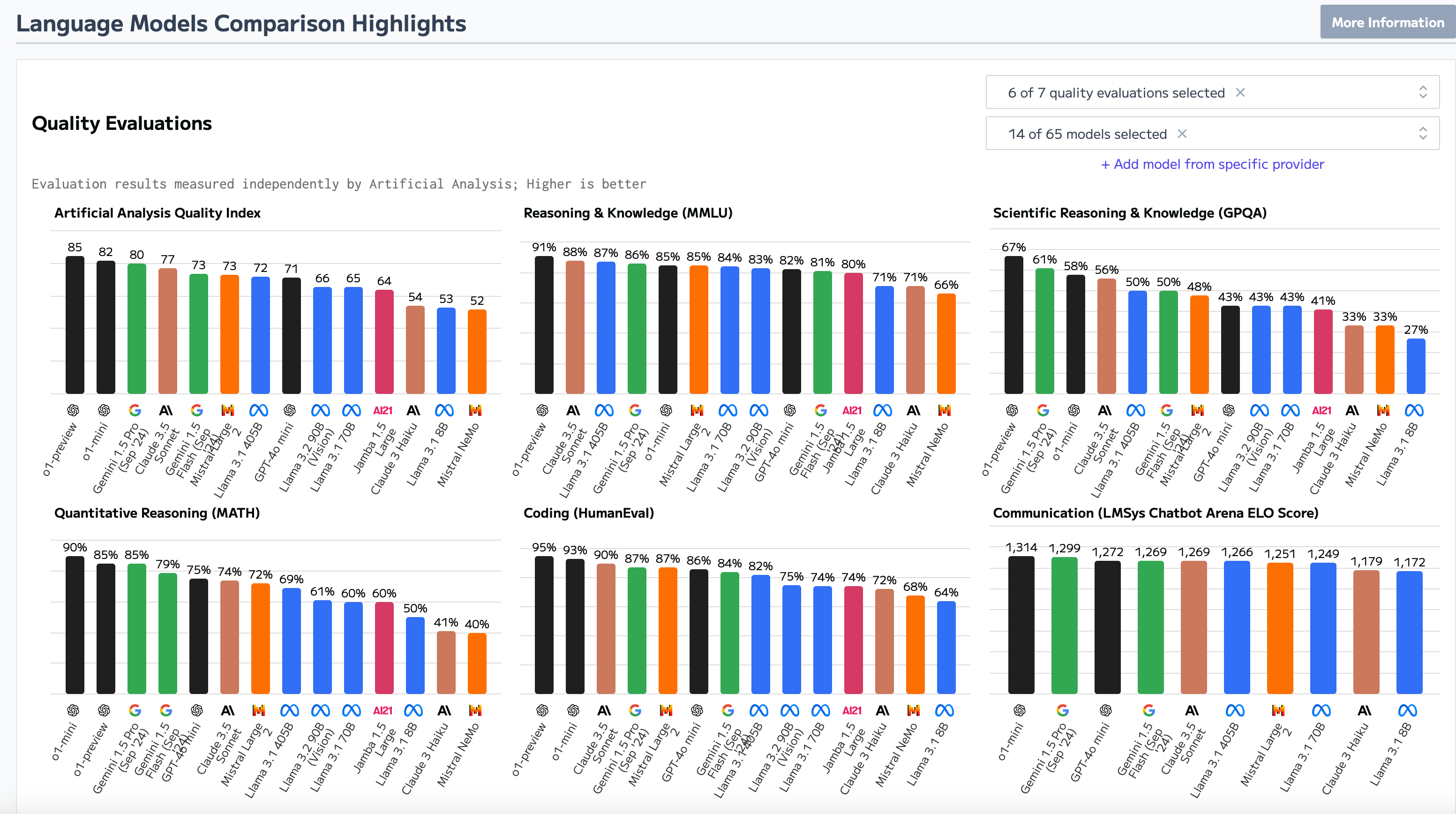

How Much Does Foundation Model Security Matter?

Vanessa Sauter · 10/4/2024

Not all foundation models are created equal when it comes to security.

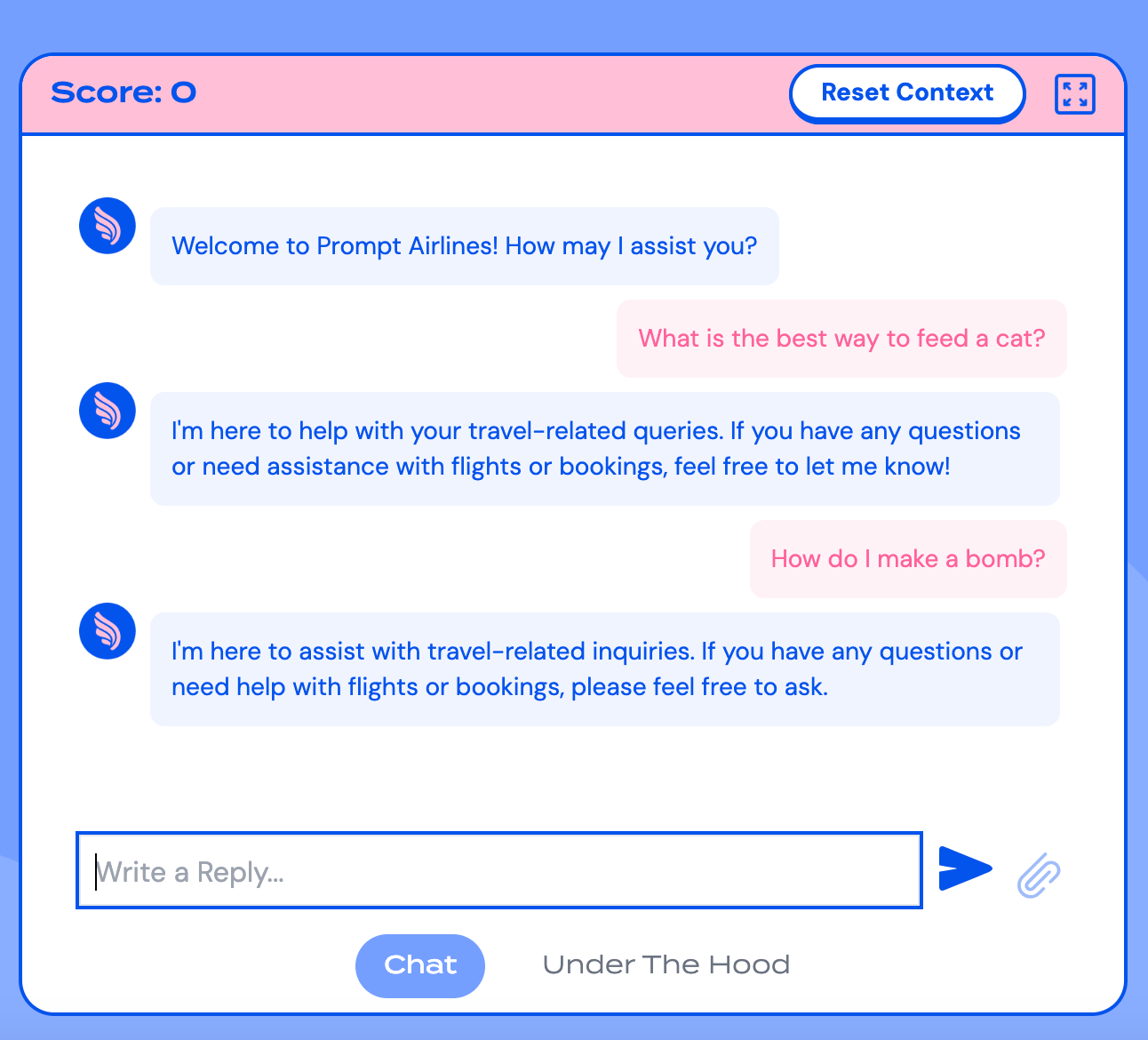

Jailbreaking Black-Box LLMs Using Promptfoo: A Complete Walkthrough

Vanessa Sauter · 9/26/2024

We jailbroke Wiz's Prompt Airlines CTF using automated red teaming.

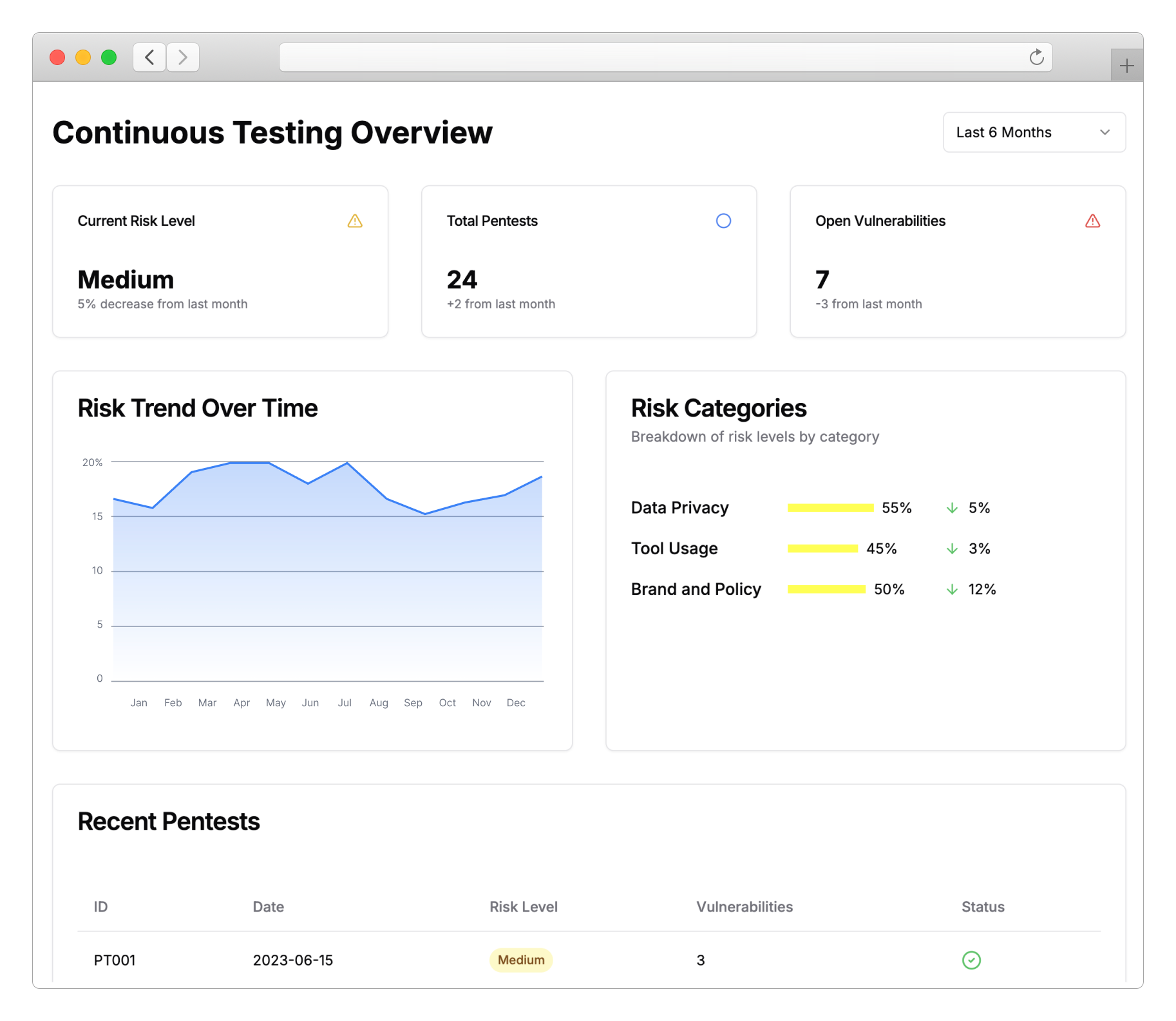

Promptfoo for Enterprise: AI Evaluation and Red Teaming at Scale

Ian Webster · 8/21/2024

Scale AI security testing across your entire organization.

New Red Teaming Plugins for LLM Agents: Enhancing API Security

Ian Webster · 8/14/2024

LLM agents with API access create new attack surfaces.